Scale ComfyUI workflows with serverless APIs

Turn any ComfyUI workflow into a production-ready API in minutes. Bulk-generate assets, power your internal tools, or back your own apps without managing infrastructure.

    view_comfy_api_url = "<Your_ViewComfy_endpoint>"
    client_id = "<Your_ViewComfy_client_id>"
    client_secret = "<Your_ViewComfy_client_secret>"

    # Set parameters
    params = {}
    params["6-inputs-text"] = "A cat sorcerer"
    params["52-inputs-image"] = open("inputs/img.png", "rb")

    # Call the API and get the logs of the execution in real time
    # (the await below must run inside an async function)
    prompt_result = await infer_with_logs(
        api_url=view_comfy_api_url,
        params=params,
        logging_callback=logging_callback,
        client_id=client_id,
        client_secret=client_secret,
    )

From ComfyUI workflow to API in minutes

ViewComfy takes the workflows you already have in ComfyUI and turns them into secure, autoscaling APIs. No custom backend or DevOps needed.

Deploy any Comfy workflow

Pick your ComfyUI workflow, choose your GPU, and create an API in a few clicks.

Update your APIs without downtime

Swap the workflow file or change the GPU model while keeping the endpoints the same.

Fast cold starts with memory snapshots

Memory snapshots cut cold start times in half compared to typical deployments.

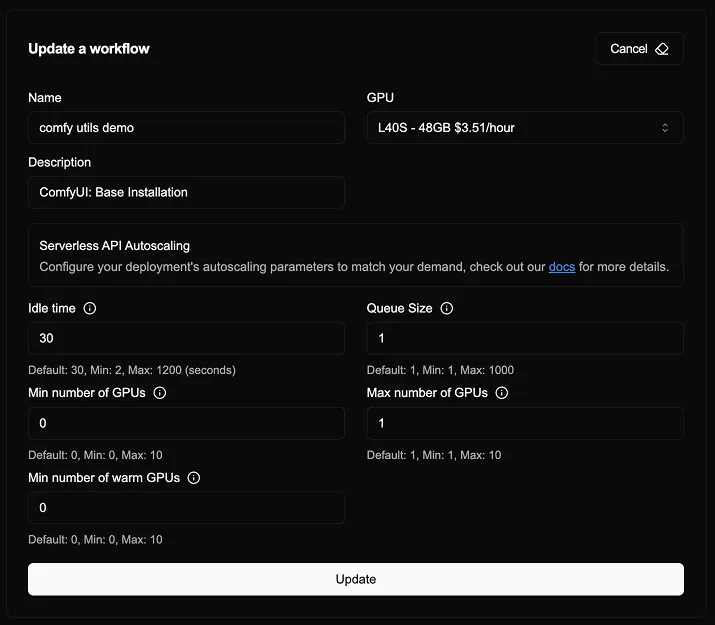

Easy to set up autoscaling

ViewComfy’s autoscaling makes sure your APIs can handle spikes in demand without you needing to manage servers, queues, or GPUs.

- Set how and when your API scales directly from the dashboard.

- Let your workflow run on multiple GPUs in parallel to keep queues short.

Works with any Comfy workflow and models

If it runs in ComfyUI, you can scale it with ViewComfy. Bring your own nodes, models, and workflows; we take care of everything else.

Full ComfyUI flexibility - Deploy any ComfyUI workflow with any nodes or models.

Use private custom nodes and models - Bring your own private custom nodes and models for full flexibility.

SageAttention 2 templates ready to go - Use our pre-optimized SageAttention 2 templates to speed up your workflows by up to 5x.

Use closed-source models in your workflows - Use our optimized node pack for API deployment to call closed-source models.

Pick the right API endpoint for your use case

Whether you’re bulk-generating content, scheduling jobs, or powering a custom app, ViewComfy’s endpoints support a wide range of workloads.

Bulk generation

Send batches of prompts and generate large volumes of assets in one go.
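
As a rough sketch of what bulk generation can look like from the client side, the snippet below builds one parameter set per prompt and runs them with bounded concurrency. The `6-inputs-text` key is taken from the sample at the top of this page. `build_batch`, `run_batch`, and the `infer` argument are hypothetical names, and `fake_infer` only stands in for a real API call.

```python
import asyncio
from typing import Any, Awaitable, Callable

Params = dict[str, Any]


def build_batch(prompts: list[str], text_key: str = "6-inputs-text") -> list[Params]:
    """Create one params dict per prompt."""
    return [{text_key: prompt} for prompt in prompts]


async def run_batch(
    batch: list[Params],
    infer: Callable[[Params], Awaitable[Any]],
    max_concurrency: int = 4,
) -> list[Any]:
    """Run every params dict through `infer`, at most `max_concurrency` at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def run_one(params: Params) -> Any:
        async with semaphore:
            return await infer(params)

    # asyncio.gather returns results in the same order as the inputs.
    return await asyncio.gather(*(run_one(p) for p in batch))


async def fake_infer(params: Params) -> str:
    """Stand-in for a real API call (assumption): echoes the prompt."""
    await asyncio.sleep(0)
    return f"generated: {params['6-inputs-text']}"
```

In real use, `infer` would wrap whichever client call you use to reach your endpoint. Capping concurrency on the client keeps a large batch from opening hundreds of requests at once.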

Inference APIs

Call your workflow from any app, script, or tool using a simple REST API.

Real-time logs

Follow long-running jobs as they happen with real-time logs over WebSockets.
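
The sample at the top of this page passes a `logging_callback` to `infer_with_logs`. A minimal sketch of such a callback follows, assuming it is called with one log message string at a time. That signature is an assumption, not something this page confirms, and `make_logging_callback` is a hypothetical helper.

```python
from typing import Callable


def make_logging_callback(
    prefix: str = "[workflow]",
) -> tuple[Callable[[str], None], list[str]]:
    """Return a callback plus the list it appends each log line to."""
    lines: list[str] = []

    def logging_callback(message: str) -> None:
        # Assumption: each call delivers one log message as a string.
        line = f"{prefix} {message.rstrip()}"
        lines.append(line)
        print(line)

    return logging_callback, lines
```

Keeping the collected lines alongside the printed output makes it possible to save the full log once the job finishes.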

Retrieve results asynchronously

Kick off async jobs and fetch results later using a prompt or job ID.
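
One common way to consume an asynchronous job is to poll for its result. The sketch below assumes a hypothetical `fetch_result` function that takes a job ID and returns `None` until the job has finished. How results are actually fetched depends on the API, so treat the names and the polling approach as illustrative only.

```python
import time
from typing import Any, Callable, Optional


def wait_for_result(
    fetch_result: Callable[[str], Optional[Any]],
    job_id: str,
    interval_s: float = 2.0,
    timeout_s: float = 600.0,
) -> Any:
    """Poll `fetch_result(job_id)` until it returns something other than None."""
    deadline = time.monotonic() + timeout_s
    while True:
        result = fetch_result(job_id)
        if result is not None:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} did not finish within {timeout_s} s")
        time.sleep(interval_s)
```

A fixed interval keeps the example short; for long jobs, a growing delay between polls would reduce the number of requests.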

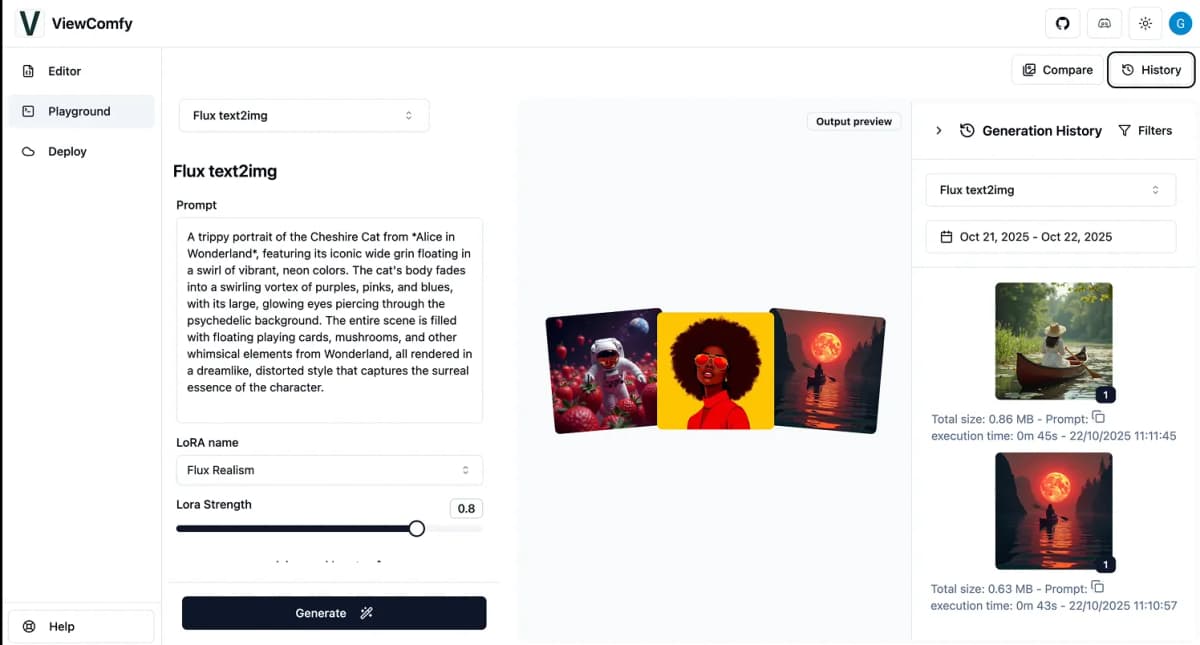

Turn your API into a shareable tool in minutes

Once your workflow is deployed as an API, you can use our no-code app builder to create a clean, shareable interface on top of it.

- Share your apps with teammates or clients so they can test workflows, review outputs, and give feedback.

- The app builder is open-source and can be run locally or self-hosted.

Observe, understand, and control your usage

Stay in control of how your APIs are used, how much they cost, and what they generate.

Easy access to logs

Inspect logs for each request to understand behavior and debug efficiently.

Analytics for usage and spend

Track performance, usage patterns, and costs at a glance.

Unlimited storage

Store all outputs and models with the option to connect your own S3 bucket.

Pick the hardware for your needs and upgrade it as your project grows.

| GPU model | Memory (VRAM) |
|---|---|
| T4 | 16 GB |
| L4 | 24 GB |
| A10G | 24 GB |
| L40S | 48 GB |
| A100-40GB | 40 GB |
| A100-80GB | 80 GB |
| H100 | 80 GB |

| Resource | Limit |
|---|---|
| CPU | Up to 8 physical cores |
| Memory | Up to 32 GB |
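
To narrow down the options, a small helper can list the GPUs from the table above that have enough VRAM for a workflow. This is a sketch only: the VRAM figures are copied from the table, `gpus_that_fit` is a hypothetical name, and the required VRAM is your own estimate for your models.

```python
# VRAM per GPU model in GB, copied from the GPU table above.
GPU_VRAM_GB = {
    "T4": 16,
    "L4": 24,
    "A10G": 24,
    "L40S": 48,
    "A100-40GB": 40,
    "A100-80GB": 80,
    "H100": 80,
}


def gpus_that_fit(required_vram_gb: float) -> list[str]:
    """Return GPU models with at least `required_vram_gb` of VRAM, smallest first."""
    fitting = [
        (vram, name) for name, vram in GPU_VRAM_GB.items() if vram >= required_vram_gb
    ]
    # sorted() is stable, so models with equal VRAM keep their table order.
    return [name for _, name in sorted(fitting, key=lambda item: item[0])]
```

VRAM is only one criterion. Speed and price also differ between models with the same amount of memory, so the first entry in the list is a starting point, not a recommendation.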